Over the past few years, researchers have developed numerous robotic systems that can detect when fruits such as strawberries or tomatoes are ripe.

For the most part, such systems work by locating a fruit on the plant and then analyzing its color with a vision system. Once the system has determined that the fruit is ripe, a robotic gripper picks it off the plant.
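To make the color step a little more concrete, here is a minimal sketch of how a ripeness check might look for a red fruit such as a strawberry. It is not taken from any of the systems mentioned here: the function names, the hue window, the saturation floor and the 70% threshold are all assumptions chosen for illustration, and a real system would first have to segment the fruit from the foliage.

```python
import colorsys


def red_fraction(pixels, max_hue_deg=20.0, min_saturation=0.4):
    """Return the fraction of (r, g, b) pixels (0-255) that look red.

    max_hue_deg and min_saturation are illustrative assumptions,
    not values taken from any real harvesting system.
    """
    if not pixels:
        return 0.0
    red = 0
    for r, g, b in pixels:
        h, s, _v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        hue_deg = h * 360.0
        # Red wraps around 0 degrees on the hue circle.
        is_red_hue = hue_deg <= max_hue_deg or hue_deg >= 360.0 - max_hue_deg
        if is_red_hue and s >= min_saturation:
            red += 1
    return red / len(pixels)


def is_ripe(pixels, threshold=0.7):
    """Call the fruit ripe if enough of its pixels are red (assumed threshold)."""
    return red_fraction(pixels) >= threshold


# Eight red pixels and two green ones stand in for a segmented fruit.
fruit_pixels = [(200, 20, 20)] * 8 + [(40, 180, 40)] * 2
print(is_ripe(fruit_pixels))  # prints True
```

Working in hue rather than raw RGB is one common way to make such a check less sensitive to brightness, although lighting still matters a great deal in practice.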

Now, of course, vision is just one of the ways that human beings determine whether fruit is ready to eat. While it is an important sense, we also rely upon a number of other senses to perform the same task -- notably smell, touch and hearing.

So it is hardly surprising then that there are a number of folks who are also working to develop systems that can provide an automated alternative to those senses.

Many researchers, for example, are working to develop, perfect and test “electronic noses” that can determine how mature a particular fruit is. Most of these electronic noses use sensor arrays that react to volatile compounds: the adsorption of volatile compounds on the sensor surface causes a physical change in the sensor that can then be detected.

Aside from sniffing fruit, human beings often give their produce a good squeeze to see if it is ripe enough to eat. That is especially true for fruit like avocados and mangos, which we squeeze to determine their hardness or softness.

Now squeezing fruit is a pretty straightforward task for a robot, especially one equipped with capacitive pressure sensors on its grippers. Such sensors could be calibrated so that the system they are connected to can ascertain the ripeness of a fruit. What is more, they could potentially be used in conjunction with both the aforementioned vision system and electronic nose on a future agricultural robotic harvester to great effect.

Lastly, of course, let us not forget that some fruits have a characteristic sound when they are ripe, and so it is not uncommon to see some individuals tapping fruits like melons to determine whether they are ready to eat. It stands to reason, then, that by analyzing the sound made by a reverberating melon hit by an actuator, software could tell you whether the melon is ripe or not.

Clearly, while robotic harvesting systems of the future might well deploy a vision system, they might also host a plethora of other sensory devices. For that reason, system integrators in the vision business might do worse than to take a few refresher courses on olfaction, tactile sensing and audio engineering before embarking on any new design!

Friday, April 13, 2012

Wednesday, April 4, 2012

A distinct lack of communication

Because of the highly application-specific nature of many vision systems, small to medium sized enterprises must often bring together teams of engineers with highly-specialized knowledge to create new bespoke designs for their customers.

Not only must these individuals have extensive experience in selecting the appropriate vision hardware for the job, but they must also be able to choose - and use - the appropriate tools to program the system.

Most importantly, however, it is often the mechanical or optical engineer working at such companies who can make or break the design of a new vision system. Working with their hardware and software counterparts, these individuals can make vitally important suggestions as to how test and inspection fixtures should be rigged to optimise the visual inspection processes.

Indeed, as smarter off-the-shelf hardware and sophisticated software relieve engineers of the encumbrance of developing their own bespoke image processing products, it is the optical or mechanical engineer who can often make the ultimate contribution to the success of a project.

Sadly, however, the teams of mechanically-minded individuals employed by such companies often work in isolation, unaware of what sorts of mechanical or optical marvels may have been whipped up by their rivals - or even customers - to address similar issues to the ones that they are working on.

That's hardly surprising, since many of these companies' customers force them to sign lengthy Non-Disclosure Agreements (NDAs) before even embarking on the development work. Some customers even purchase the rights to the design after the machine has been built to prevent their rivals from developing similar equipment at their own facilities.

But while this unbridled protectionism is understandable from the customer's perspective, preventing such information from being disseminated in the literature or on the Interweb actually does a complete disservice to the engineers working at the companies that are building the equipment.

That's because, rather than being able to gain any sort of education from reading about how other engineers may have solved similar problems to their own - especially those in the all-important fields of mechanics and optics - they are effectively trapped in a secluded world where they can only call upon their own experience and ingenuity, which may or may not be enough for the job.

Is it time then for systems integrators to politely ask their customers to forgo the signing of such NDAs so that such mechanical and optical information can be made more widespread for the benefit of us all? Perhaps it is. But I'd be a fool to think that it will ever happen.

Friday, March 30, 2012

The wonderful world of vision

Earlier in the month, whilst attending an entire day's worth of seminars on 3-D imaging presented by the folks at Stemmer Imaging (Tongham, UK), our European correspondent overheard a couple of CEOs discussing the lack of talented young engineers entering the wonderful world of vision systems design.

As you might expect, the CEOs naturally felt that the low salaries offered by many of the Small to Medium Sized Enterprises that build vision systems for large OEM customers had a lot to do with it.

They believed that the first class engineering talent - in the UK at least - was still being attracted away from the engineering profession to enter less satisfying, yet ultimately more rewarding fields, such as investment banking.

While there may be some truth to that statement, I can't help but think that there's more to it than that. I think that the real problem is that manufacturers just haven't done much at all to make products that are affordable enough to allow children to experiment with hardware and software at an early age.

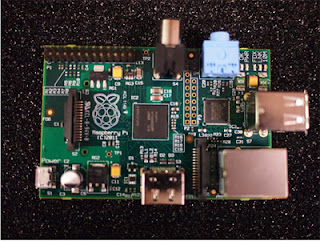

Well, I'm pleased to see that there are some folks out there who agree with me. More specifically, the folks at a Cambridge University (Cambridge, UK) based spinout called Raspberry Pi. The clever folks there have developed and are now selling a small $25 Arm (Cambridge, UK) based computer that plugs into a TV and a keyboard and can be used by children (and interested adults!) as a means to learn programming.

The idea behind the tiny and cheap computer for kids came in 2006, when Eben Upton and his colleagues at the University of Cambridge’s Computer Laboratory (Cambridge, UK), including Rob Mullins, Jack Lang and Alan Mycroft, became concerned about the year-on-year decline in the numbers and skills levels of the A-Level students applying to read Computer Science in each academic year.

By 2008, processors designed for mobile devices were becoming more affordable, and powerful enough to deliver multimedia content, a feature the team felt would make the board desirable to kids who wouldn't initially be interested in a purely programming-oriented device.

Eben (now a chip architect at Broadcom), Rob, Jack and Alan, then teamed up with Pete Lomas at Norcott Technologies, and David Braben, co-author of the seminal BBC Micro game Elite, to form the Raspberry Pi Foundation, and the credit-card sized device was born.

This is a terrific idea and one which some of our esteemed colleagues who market both hardware and software for the vision systems business might like to consider emulating. What better way to encourage those young programmers to get hooked on vision systems design at an early age?

Friday, October 7, 2011

Software simplifies system specification

National Instruments' NI Week in Austin, TX was a great chance to learn how designers of vision-based systems used the company's LabVIEW graphical programming software to ease the burden of software development.

But as useful as such software is, I couldn't help but think that it doesn't come close to addressing the bigger issues faced by system developers at a much higher, more abstract level.

You see, defining the exact nature of any inspection problem is the most taxing issue that system integrators face. And only when that has been done can they set to work choosing the lighting, the cameras, and the computer, and writing the software that is up to the task.

It's obvious, then, that software like LabVIEW only helps tackle one small part of this problem. But imagine if it could also select the hardware, based simply on a higher-level description of an inspection task. And then optimally partition the software application across such hardware.

From chatting to the NI folks in Texas, I got the feeling that I'm not alone in thinking that this is the way forward. I think they do, too. But it'll probably be a while before we see a LabVIEW-style product emerge into the market with that kind of functionality built in.

In the meantime, be sure to check out our October issue (coming online soon!) to see how one of NI's existing partners -- Coleman Technologies -- has used the LabVIEW software development environment to create software for a system that can rapidly inspect dinnerware for flaws.

Needless to say, the National Instruments software didn't choose the hardware for the system. But perhaps we will be writing an article about how it could do so in the next few years.
